Introduction

With artificial intelligence’s promise of universal solutions, we risk losing the cultural significance of our problem-solving muscle, especially because reliance on generative AI would maintain and prioritize Anglo-American perspectives and technological frameworks. Amid the race to advance artificial intelligence, our research examines the tensions between human and generative AI creativity and asks questions about the capabilities, limitations, and inherent biases of this technology. This article presents our research project, Anotherism, as it stands so far. It focuses on a research workshop we conducted as part of the project with the Pluriversal Design Special Interest Group (SIG) of the Design Research Society (DRS).

Framing

In 2016, it took Tay, a chatbot developed by Microsoft and launched on Twitter, less than twenty-four hours to turn into a racist and sexist entity. This demonstrated how biased the data it learned from was, and still is (Vincent, 2016). By contrast, Tay's predecessor, XiaoIce, released in China in 2014, behaved very differently. Designed as an AI companion, XiaoIce focused on building long-term relationships and emotional connections with its users, while Tay was made to gain a better conversational understanding at the expense of… (Zhou et al., 2020). Eight years later, the central challenge with artificial intelligence remains the same: bias. AI chatbots and generative AI have one thing in common: both learn from data that humans create and post on the internet. The same dynamic that turned Tay racist and sexist now contributes to bias in image generation. In 2024, many image-generation tools are available to anyone interested, and generative AI may be creating more problems than it solves. After analyzing 5,000 images, Nicoletti & Bass (2023) found that images generated with the generative AI tool Stable Diffusion amplified gender and racial stereotypes. Their research demonstrates that, like early AI chatbots, generative AI can produce inaccurate and misleading outputs, fabricate data, and confidently present hallucinations (Nicoletti & Bass, 2023).

As women of color from the Global South, we are deeply concerned about the inherent Anglo-American bias in generative AI. This bias is evident in generative AI's design and intended use, both of which center an Anglo-American context. In one of our ongoing explorations, we ask whether these biases are embedded in the data AI learns from or emerge from choices made during development. Broadly, we posit that AI enables technological colonialism. It imposes solutions and mindsets developed in the Global North onto the Global South rather than drawing on or facilitating local and regional practices and innovations. In this project, we therefore ask: How might an AI solution devoid of local context impose a monolithic approach to problem-solving regardless of regional cultures, practices, and behaviors?

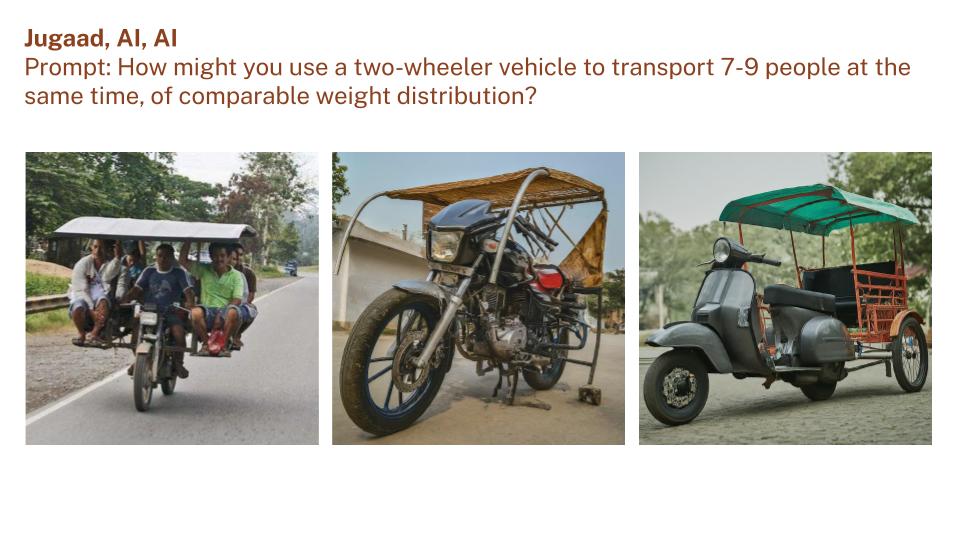

The foundation of AI is biased. As design practitioners who use and develop these tools, we need a better understanding of generative AI’s limitations to use it effectively. Through our research project, Anotherism, we compare jugaad with generative AI to unpack how and where biases appear. Jugaad is a Hindi term for an improvised solution based on ingenuity, cleverness, workarounds, and innovative problem-solving. It is a practice that transcends the Anglo-American context, and drawing on it, we critically evaluate the risks of generative AI solutions that are not rooted in local contexts. Dr. Butoliya describes jugaad as an act of freedom, a bottom-up reaction to the top-down oppression caused by the capitalist subjugation of the market and societies (Butoliya, 2022). For Indians like us, jugaad demonstrates joy within restrictions, where scarcity leads to innovation. However, much of the imagery of jugaad on the internet diminishes its ingenuity, resourcefulness, and humanity. Anotherism therefore uses jugaad as a critical mode of reflection. Through it, we trace the gaps in learning models and recognize the biases of AI-generated content.

Human Ingenuity vs AI Generations

We began by generating with, and learning from, generative AI without any expectations[1]. A computer is, of course, not a human. Starting from that fact, we recognized that we needed to learn how humans solve problems before we could truly compare humans and generative technology. This research workshop and project defined humans as social beings with complex experiences and a capacity for abstract thinking. Humans have a wide range of emotions and connections, and the participants in this project came from different parts of the world with a diversity of perspectives and identities. Generative technology, though complex, is not as complex as the humans we interact with in our research workshops. Human complexity was not a recruitment criterion but an observation. Through this project, we are beginning to explore and define the gap between humans and generative AI. Our aim is to determine how design practitioners can use generative AI to support their ingenuity and problem-solving. To learn about human ingenuity, we collaborated with the Pluriversal Design SIG to offer a research workshop. There, we set out to explore the same prompts and generations we had explored with ChatGPT, Gemini, MidJourney, Claude, and other tools.

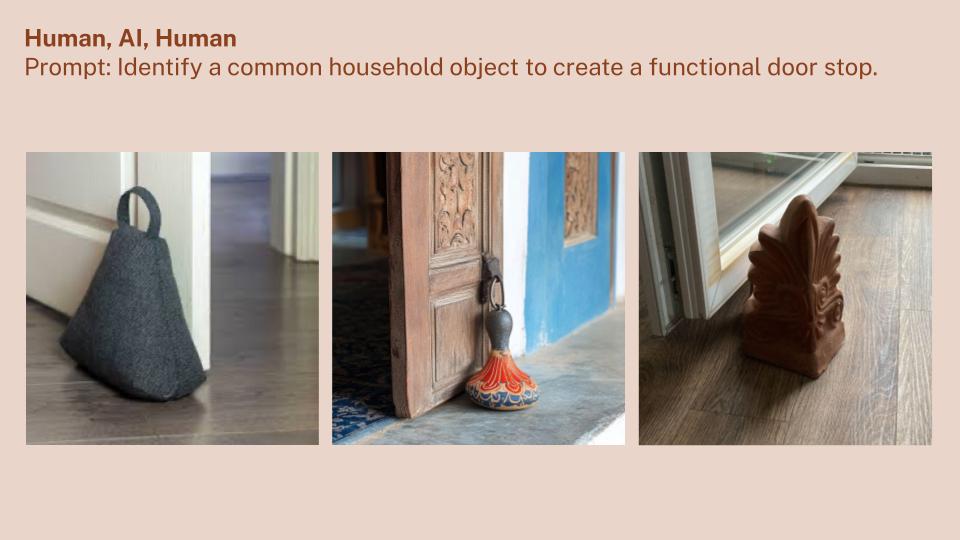

Image Caption: Generative AI responses to prompts about doorstops in Indian households

Generating with Humans: Workshop Findings

For the Pluriversal Design SIG workshop, we asked participants to identify a common household object that could serve as a functional doorstop, so that we could study:

- What context, knowledge, and lived experience do humans use to address everyday problems?

- How does the solution resonate with the cultural context in which it lives?

- How practical are the solutions we generated together?

Using everything from a ball of socks and a rope to wooden wedges and folded paper, the workshop participants came up with all sorts of solutions. They truly expanded our understanding, as facilitators, of what a doorstop can be. Before the workshop, we hypothesized that humans consider the physical, chemical, and gravitational characteristics of objects before creating a solution. However, location can make a difference, because participants in different parts of the world each bring a different context to the problem. Ahead of the session, we asked the participants to tell us more about themselves so that we could understand the varied backgrounds and experiences they brought to the workshop. From our survey, we learned that 57% of the participants identified as white, 28.6% as Asian, and 14.3% as Latino/Hispanic. While most respondents knew what jugaad meant, only 80% said they were likely to fix things around the house or address everyday problems themselves.

The workshop participants generated diverse solutions, but the discussion of their motivations was more significant for our research. Most participants relied on repurposing existing materials. They evaluated the practicality of each object while reflecting on the social norms and meanings attached to it. While describing their solution, one participant said, "the element was… an acroterion and it's a traditional Greek roofing element… But now I use it to keep the door open. Suddenly, from being just beautiful. Now it has a function."

Similarly, one participant shared an image of a cast-iron Black Americana piggy bank that their family had used as a doorstop before moving to the United States. However, because of cultural tensions and ongoing conversations about race, the participant and their family decided to stop using it as a doorstop. Here, we see social norms and context changing the purpose of an object and its usage. For others, this problem-solving exercise was about intuition. One group prototyped in real time and used socks as a doorstop. The human prototype worked perfectly, yet it is one of the solutions we have so far been unable to recreate using generative AI. The conversation during the workshop naturally gravitated toward buying versus creating. Human ingenuity and problem-solving amount to nothing if we buy everything around us rather than rethinking our household and everyday problems.

Implications

Our central hypothesis, and a pillar of the project, is that humans draw on their understanding of their immediate environment, needs, and limitations, which leads them to highly adaptable and feasible outcomes. Their solutions balance imagination with the constraints of physics and practicality, focusing on function. Human problem-solving often includes cultural adaptability, empathy, and emotional intelligence, qualities that generative AI currently lacks. Meanwhile, generative AI solutions often defy the laws of physics, prioritizing creative and imaginative outputs over practicality. It turns out to be the age-old debate of form versus function.

After studying several generative AI process maps and creating images, we believe that generative AI currently adds a country layer to its renders and outputs. As we experiment with different text- and image-based AI tools, we find that prompts with the words jugaad and India produce very different imagery from prompts with grassroots innovation, adhocism, or kanju, practices similar to jugaad. During the workshop, this assumption turned into an insight. When one participant shared how they use sandbags as a doorstop, we realized that MidJourney, when prompted, had added patterns and designs associated with Indian aesthetics to a sandbag. Is that an issue, you might ask? We believe it is. In this instance, problem-solving for generative AI meant reinforcing and prioritizing Global North perspectives, regardless of where the solution was intended to be used. At this time, generative AI is not effectively integrating diverse local knowledge and practices to decenter Anglo-American narratives, but the bigger question is: will it ever?

We are continuing to explore generative AI tools and human problem-solving, and we are hosting a roundtable discussion on November 15, 2024. We will also conduct another virtual workshop in early 2025. If you are interested in participating, contact us at anotherismproject@gmail.com.

[1] Prompts used to generate images of a lamp based on jugaad:

- Create an image of a household item being used as a lamp, using the principles of jugaad in an Indian household

- Create an image of a household item being used as a lamp with a lightbulb or other electrical features, using the principles of jugaad in an Indian household.

- Using the principles of jugaad in an Indian household, create an image of a household item being used as a lamp with a lightbulb or other electrical features. Make this lamp hang from a wall or the ceiling, and it should be large enough to light up a 150 sq foot room

- Using the principles of jugaad in an Indian household, create an image of a household item being used as a lamp with a lightbulb or other electrical features. Make this lamp hang from a wall or the ceiling, and it should be large enough to light up a 350 sq foot room in an urban Indian city

- Using the principles of jugaad in an Indian household, create an image of a household item being used as a lamp with a lightbulb or other electrical features. Make this lamp hang from a wall or the ceiling, and it should be large enough to light up a 350 sq foot room